“The thing”: An analysis of a weird classic Mac OS bug [Part 1]

A long time ago, I read this article (in French) written by an ex-Apple support technician. It documented a weird bug that occurred in some versions of “classic” Mac OS (pre-Mac OS X) and had some interesting consequences. The author nicknamed the bug “The thing”, in reference to the 1982 film of the same name.

For some reason (probably the sheer weirdness of the bug), I’ve remembered this article all these years, and I recently decided to investigate it, now that I know a bit more about how computers work ;)

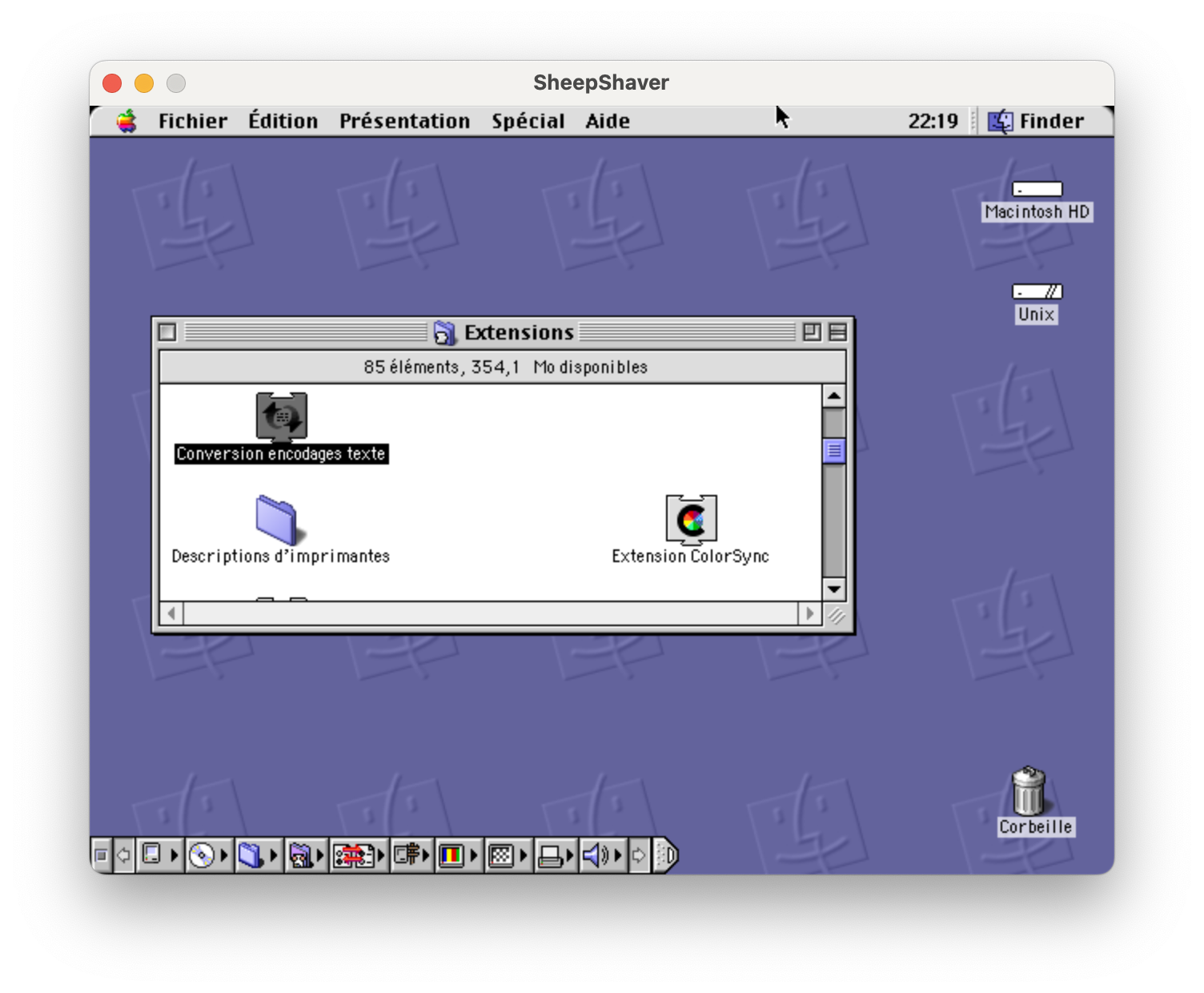

First, let’s set the scene: Mac OS 8.5 running in the SheepShaver emulator. You will notice that I’m using the French version of Mac OS, and there is a reason for that. I’ll try to provide translations where needed.

For those who have never seen it, this is what classic Mac OS looked like. It’s not that different from modern Mac OS (now spelled macOS): there is a menu bar at the top, showing the menus of the currently focused application and a clock; the desktop, with the hard drive and some icons; the Trash in the bottom right corner of the screen (as part of the desktop)… There is no Dock; instead, you can put frequently used applications in the Apple menu, and the menu at the top right is used to switch between running applications. The icon bar at the bottom left is called the Control Strip and provides access to commonly used system settings like volume control (similar to the icons on the right side of the menu bar in modern macOS).

Unlike Mac OS X, classic Mac OS is not based on Unix and organizes its files very differently:

- There is no single root directory (/); instead, each volume is its own root, in a way.

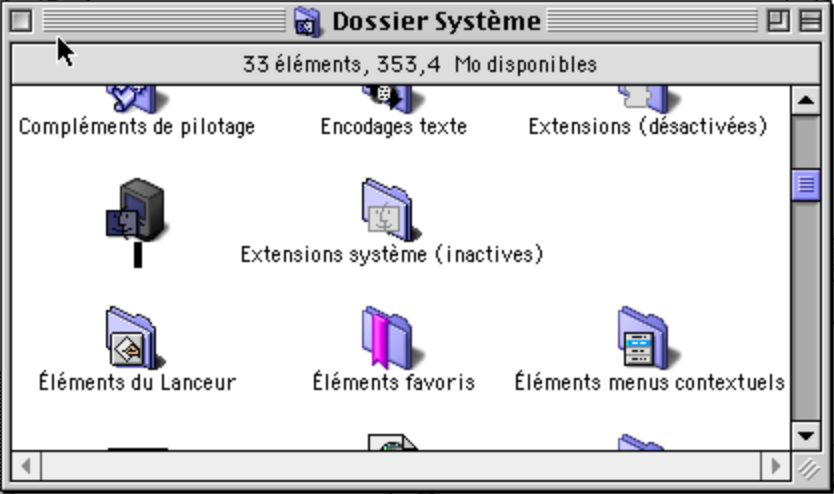

- The system disk (here called Macintosh HD, but it can have any name) has a folder called “System Folder” (Dossier Système in the French version), which contains most of the files the system needs to function. Applications and user files can be put anywhere on the disk (although in later versions of Mac OS it was standard practice to put applications in an Applications folder at the root of the disk).

- The System Folder contains various system files, most notably the System file (which holds most of the system code and resources) and the Finder (the same thing as the Finder in modern macOS). It also contains folders, like Preferences (Préférences, which holds settings files for the system and other applications) and Extensions.

- The Extensions folder contains files that are loaded at system startup and can extend the system in various ways (for example, to support additional hardware, to provide additional APIs for applications, or to customize the system).
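To make the “each volume is its own root” idea a bit more concrete: classic Mac OS full pathnames start with the volume name and use colons as separators, as in `Macintosh HD:System Folder:Extensions`. The Python sketch below is a hypothetical helper (`split_classic_path` is a name made up for this post, not a Mac OS API) that splits such a path. For simplicity, it only handles full pathnames and rejects the `::` parent-directory syntax.

```python
from typing import List, Tuple


def split_classic_path(path: str) -> Tuple[str, List[str]]:
    """Split a classic Mac OS full pathname into (volume name, components).

    Hypothetical helper for illustration only; not a Mac OS API.
    """
    if ":" not in path or path.startswith(":"):
        # No colon, or a leading colon, means a partial (relative) pathname.
        raise ValueError("only full pathnames are handled in this sketch")
    # The first component is always the volume name: there is no "/" above it.
    volume, *rest = path.split(":")
    # A trailing colon ("Macintosh HD:") leaves an empty last component; drop it.
    if rest and rest[-1] == "":
        rest.pop()
    if "" in rest:
        # "::" means "parent directory" in classic Mac OS paths.
        raise ValueError("'::' is not handled in this sketch")
    return volume, rest


print(split_classic_path("Macintosh HD:System Folder:Extensions"))
# ('Macintosh HD', ['System Folder', 'Extensions'])
```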

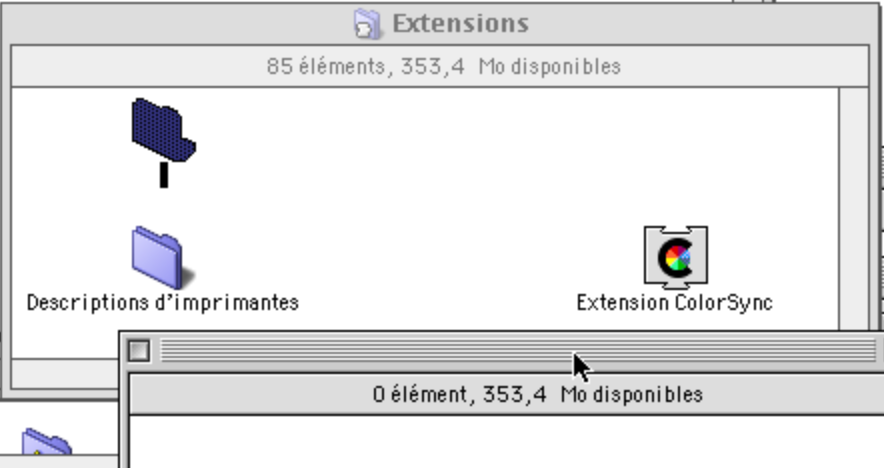

The screenshot above shows the Extensions folder and the Conversion encodages texte (Text Encoding Converter) extension. From what I can tell, this extension provides APIs to convert between text encodings. At the time, Unicode was relatively new, and operating systems handled text using various incompatible encodings (in Western Europe, Mac OS used MacRoman and Windows used CP-1252).
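To illustrate the kind of problem such an extension solves, here is a small Python sketch. Python’s built-in `mac_roman` and `cp1252` codecs stand in for the Text Encoding Converter (this is not its actual API): the same accented letter is stored as different bytes in each encoding, and decoding MacRoman bytes as if they were CP-1252 garbles the text.

```python
# Python's built-in codecs stand in for the Text Encoding Converter here;
# this is not the Mac OS API, just an illustration of the problem it solves.
text = "Préférences"

mac_bytes = text.encode("mac_roman")  # how classic Mac OS would store it
win_bytes = text.encode("cp1252")     # how Windows would store it

print(mac_bytes)  # b'Pr\x8ef\x8erences'
print(win_bytes)  # b'Pr\xe9f\xe9rences'

# Reading MacRoman bytes as if they were CP-1252 produces garbled text...
print(mac_bytes.decode("cp1252"))  # PrŽfŽrences

# ...while converting through Unicode gives the right result.
print(mac_bytes.decode("mac_roman").encode("cp1252") == win_bytes)  # True
```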

Now, I’m going to drag this extension to the desktop, which will effectively disable it, and reboot. Since this is an extension, not a critical system file, the system should work fine without it. After all, holding Shift at startup disables all extensions (which lets you recover from a crashing extension), so disabling just one of them should be fine, right?

… what.

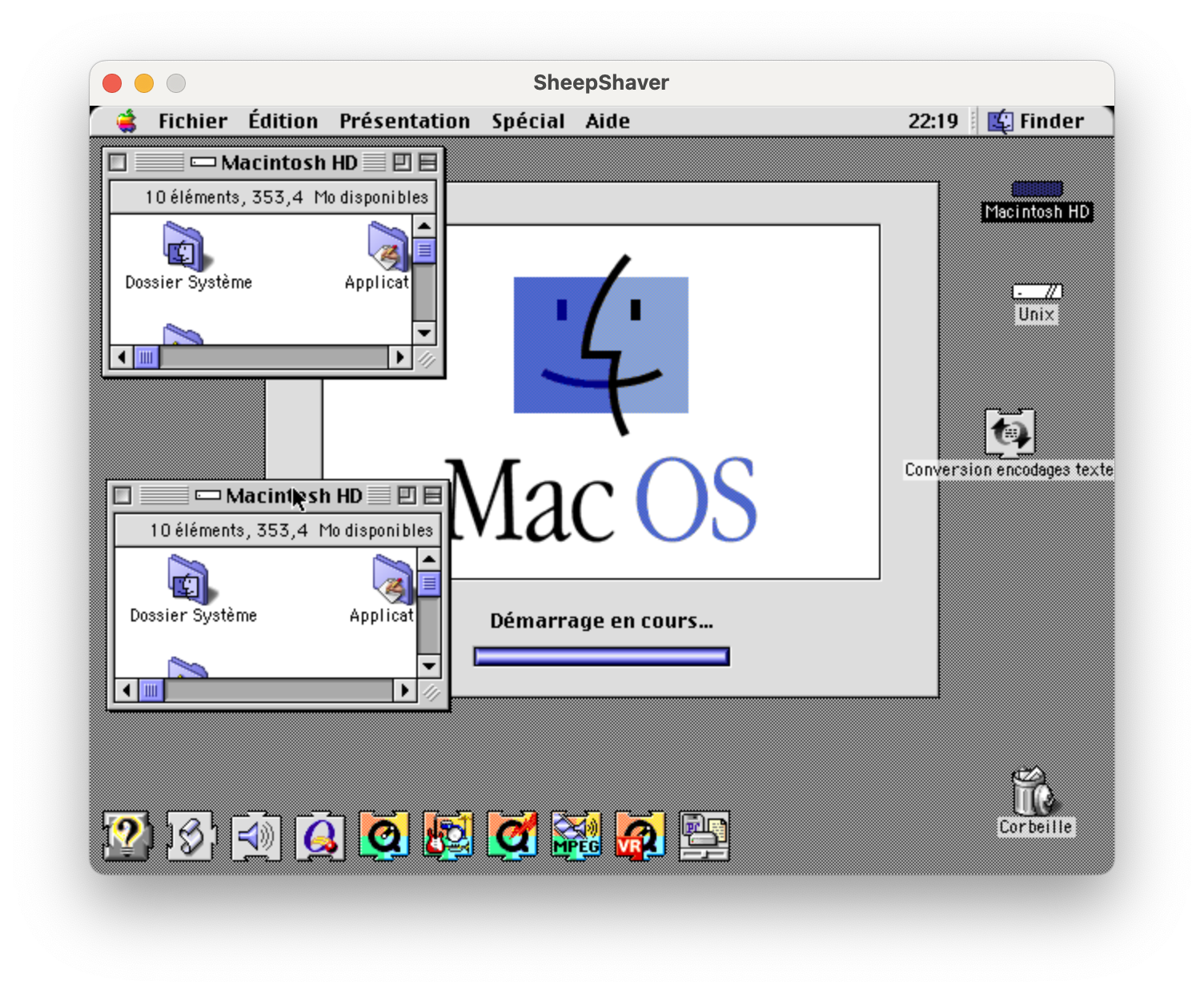

You may notice several things:

- The desktop background is grey, there is a large “Mac OS” window with a progress bar, and a row of icons on the bottom left of the screen. What you’re seeing is the Mac OS boot screen.

- The “Macintosh HD” window seems to be open twice. The classic Mac OS Finder does not allow that; what actually happened is that I opened the window and then dragged it to a different location.

What seems to be happening is that the system fails to paint the desktop background. The boot screen and the phantom Finder window are just remnants in video memory that should have been painted over with the background, but weren’t.

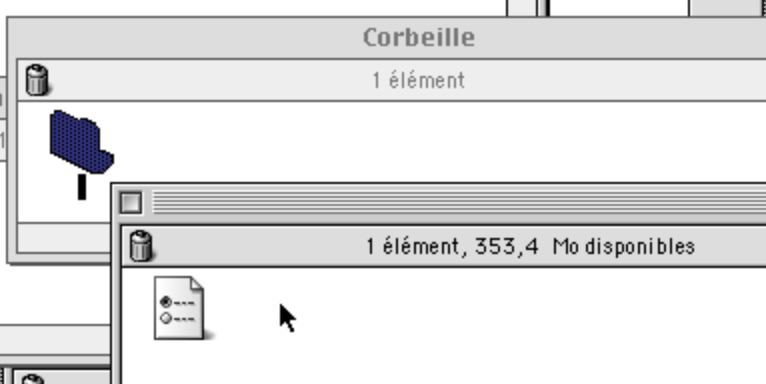

Another thing: the Control Strip is not displayed. And the Trash icon indicates that there is something in it, although it was empty before rebooting. What is there?

It’s a folder with an empty name, containing a file (which looks like a preferences file), also with an empty name. Empty names are not a thing on classic Mac OS; you can’t create a file named “.txt” and hide the extension, since there is no way to hide extensions in the first place (file extensions were not really used by Mac OS at that time).
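As a rough illustration of the naming rules, here is a hypothetical check (`is_valid_finder_name` is made up for this post) approximating what the classic Finder accepts for a file or folder name: between 1 and 31 characters, and no colon, since the colon is the path separator. By these rules, the empty names above should never exist.

```python
MAX_NAME_LENGTH = 31  # Finder limit for file and folder names in Mac OS 8.x


def is_valid_finder_name(name: str) -> bool:
    """Hypothetical check approximating the classic Finder's naming rules."""
    if not 1 <= len(name) <= MAX_NAME_LENGTH:
        # Empty names (like the ones in the Trash) and over-long names are rejected.
        return False
    if ":" in name:
        # ':' is the path separator, so it cannot appear inside a name.
        return False
    return True


print(is_valid_finder_name("Dossier Système"))  # True
print(is_valid_finder_name(""))                 # False
```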

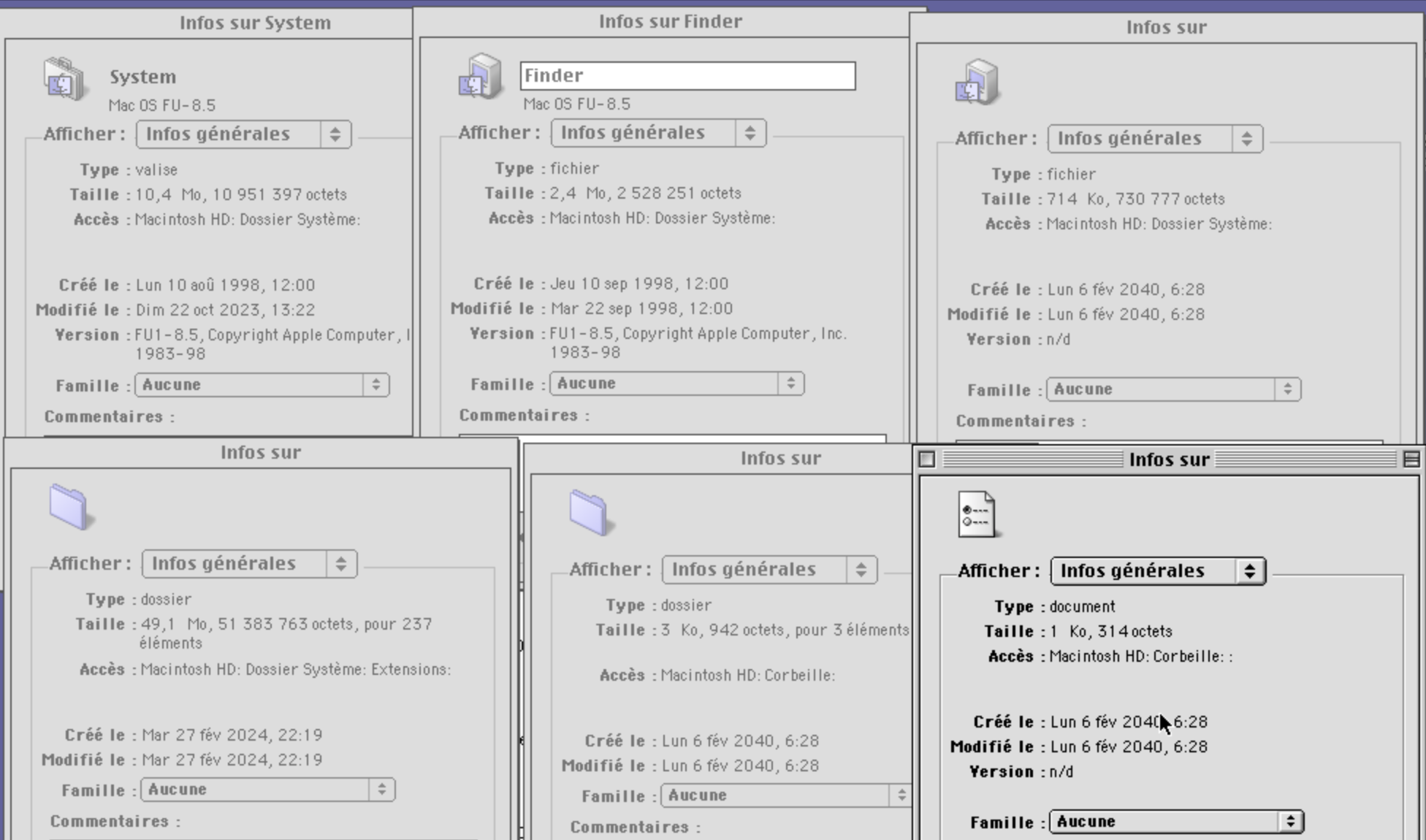

So this is very weird and smells like filesystem corruption. There is also an unnamed file in the System Folder, and an unnamed folder in the Extensions folder.

Okay, this is starting to look too much like a creepypasta. Let’s put the extension back in its place and reboot. That will fix things, right? Well, it fixes the desktop background and the Control Strip, but the unnamed files are still there.

Maybe we can drop all these files into the Trash and empty it. Well, doing this in SheepShaver crashes the emulator. I’m tempted to believe that on a real Mac, it would crash either the Finder or the entire system. (I’ve checked that dragging other files and folders works fine.)

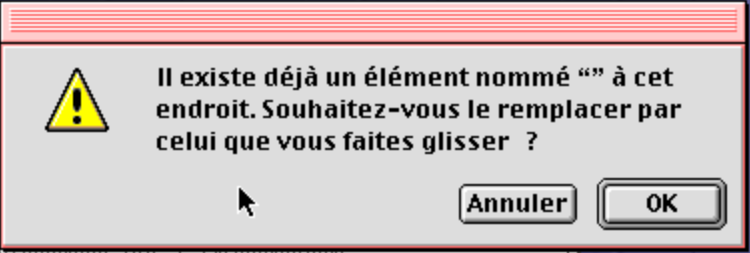

Let’s try to drop the files into a regular folder, instead of the Trash. I’m sure this is going to go well.

It says that the destination folder already contains an item with no name, which is going to be replaced. This is not going to end well, but let’s continue anyway.

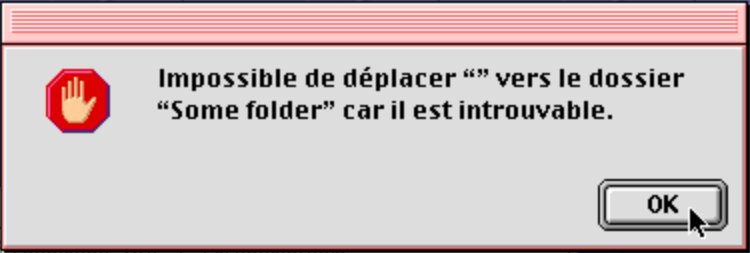

The move fails because the destination folder cannot be found. Clicking OK reveals that the destination folder has indeed disappeared, along with its contents. Oops.

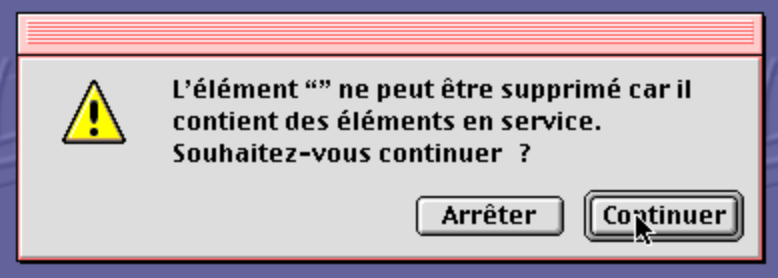

Maybe we can at least get rid of the folder and the file in the Trash by emptying it?

The message says that an item with no name cannot be deleted because it contains items that are “in use”. This usually means that an application has the file open. I’m not sure that’s actually the case here, but either way, it prevents the Finder from deleting the file.

What about fixing the empty names by renaming the files? Attempting to rename them by clicking on their names, or by selecting them and pressing Return, fails: nothing happens. Opening their Get Info window does not help either: the filename field, which is normally editable, is read-only there.

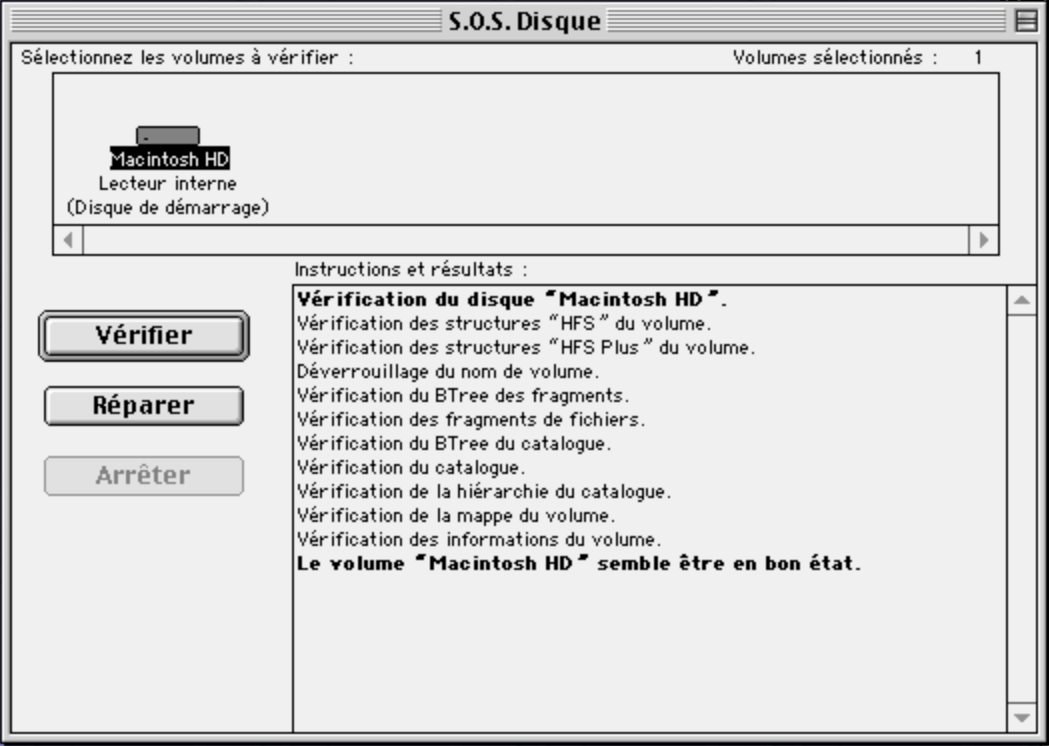

Since this looks like filesystem corruption, let’s run the built-in filesystem checker (Disk First Aid, called S.O.S Disque in the French version). And…

It finds nothing!

(I’ve also tried Norton Disk Doctor, but it hangs at startup, probably because of an issue with the emulator. Apparently, on a real Mac, it would find a strange error in the filesystem but fail to correct it.)

Okay, all of this is very weird, but you know what’s weirder? How specific this bug is. It only happens if the following conditions are met:

- The Mac OS version is strictly higher than 8.1 and strictly lower than 9.0, so basically Mac OS 8.5, 8.5.1 and 8.6, unless I’m missing something. (Mac OS 8.1 also does weird things when the extension is removed, but it does not create nameless files and folders.)

- Mac OS is installed on a volume using the HFS+ (Mac OS Extended) filesystem. If it’s installed on an HFS (Mac OS Standard) filesystem, the bug does not occur.

- A localized version of Mac OS is used. It happens with the French version of Mac OS (and probably with some other localized versions) but not with the US version.
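One visible difference between these setups is in the names themselves. The US version’s folder names (“System Folder”, “Preferences”) are plain ASCII, while French ones like “Dossier Système” and “Préférences” are not. HFS stores names as up to 31 bytes in a legacy Mac encoding (MacRoman for English and French), whereas HFS+ stores them as up to 255 UTF-16 code units in a decomposed Unicode form, so on HFS+ a name like “Dossier Système” has to be converted from MacRoman to Unicode somewhere along the way. The Python sketch below only compares the two representations: the helper names are hypothetical, Python’s `unicodedata` NFD stands in for Apple’s own decomposition rules, and it makes no claim about what actually causes the bug.

```python
import unicodedata
from typing import List


def hfs_name_bytes(name: str) -> bytes:
    """Rough model of an HFS (Mac OS Standard) name: MacRoman bytes, at most 31."""
    data = name.encode("mac_roman")  # MacRoman assumed (English/French systems)
    if len(data) > 31:
        raise ValueError("HFS names are limited to 31 bytes")
    return data


def hfs_plus_name_units(name: str) -> List[int]:
    """Rough model of an HFS+ (Mac OS Extended) name: decomposed UTF-16 code units.

    Python's NFD stands in for Apple's variant of canonical decomposition;
    both split "è" into "e" + U+0300 COMBINING GRAVE ACCENT.
    """
    decomposed = unicodedata.normalize("NFD", name)
    raw = decomposed.encode("utf-16-be")  # HFS+ stores names big-endian
    units = [int.from_bytes(raw[i:i + 2], "big") for i in range(0, len(raw), 2)]
    if len(units) > 255:
        raise ValueError("HFS+ names are limited to 255 UTF-16 code units")
    return units


for name in ("System Folder", "Dossier Système"):
    print(f"{name!r}: ASCII only = {name.isascii()}")
    print("  HFS :", hfs_name_bytes(name))
    print("  HFS+:", " ".join(f"{u:04X}" for u in hfs_plus_name_units(name)))
```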

So what is going on? How can removing an extension create weird glitches and corrupt the filesystem in a way that some disk checkers cannot detect? In the next post, I’ll inspect the filesystem to try to determine what went wrong. We’ll learn a few things about HFS+ along the way.